publications

Publications in reverse chronological order

2026

-

Adaptive Q-law Control for Closed-loop Electric Propulsion Orbit Transfer

Suraj Kumar, Sri Aditya Deevi, Aditya Rallapalli, and 1 more author

Accepted at the American Institute of Aeronautics and Astronautics (AIAA) Scitech Forum, Jan 2026

This paper proposes an adaptive Q-law controller for closed-loop low-thrust, many-revolution orbit transfers. A Lyapunov-based modification of the classical Q-law controller is introduced to ensure closed-loop stability and real-time implementability for GTO-GEO transfer. To optimize the control gains for minimum-time transfers, a novel simulation-based two-stage hybrid optimization framework is developed. Unlike the classical Q-law with fixed control gains, the proposed approach makes the control gains explicitly state- and time-dependent by parameterizing them with a neural network. In the first, offline stage, Sobol-sequenced random samples of the network weights and their corresponding orbit transfer times are used to compute the empirical mean and a regularized covariance of the top performers. This distribution then warm-starts the gradient-free Covariance Matrix Adaptation Evolution Strategy (CMA-ES) optimizer, directing its search toward the most promising regions. The second, online stage employs an optimization loop, where the archive-seeded CMA-ES iteratively samples candidate network weights, evaluates them on the high-fidelity simulator, and updates its search distribution until convergence. Finally, the optimized neural controller is distilled into a polynomial function approximator for onboard implementation.
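As a rough illustration of the offline stage described in the abstract above, the sketch below fits a Gaussian (empirical mean plus a ridge-regularized covariance) to the best-scoring weight samples. It is not the authors' implementation: the function name `elite_gaussian`, the `elite_frac` and `ridge` parameters, the uniform pseudo-random samples (standing in for a Sobol sequence), and the toy cost (standing in for a simulated transfer time) are all assumptions made for illustration. The CMA-ES step itself is not shown.

```python
import random


def elite_gaussian(samples, costs, elite_frac=0.2, ridge=1e-6):
    """Fit a mean and regularized covariance to the lowest-cost samples.

    samples: list of weight vectors (lists of floats, equal length)
    costs:   one scalar cost per sample (lower is better)
    """
    n_elite = max(2, int(len(samples) * elite_frac))
    # Indices of samples sorted by ascending cost; keep the top performers.
    order = sorted(range(len(samples)), key=lambda i: costs[i])
    elite = [samples[i] for i in order[:n_elite]]
    dim = len(elite[0])

    # Empirical mean of the elite set.
    mean = [sum(x[d] for x in elite) / n_elite for d in range(dim)]

    # Unbiased sample covariance of the elite set.
    cov = [
        [
            sum((x[i] - mean[i]) * (x[j] - mean[j]) for x in elite) / (n_elite - 1)
            for j in range(dim)
        ]
        for i in range(dim)
    ]
    # Ridge term on the diagonal keeps the covariance positive definite.
    for d in range(dim):
        cov[d][d] += ridge
    return mean, cov


if __name__ == "__main__":
    random.seed(0)
    # Hypothetical stand-ins: uniform samples instead of a Sobol sequence,
    # and a quadratic toy cost instead of a simulated orbit transfer time.
    samples = [[random.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(64)]
    costs = [sum((w - 0.5) ** 2 for w in s) for s in samples]
    mean, cov = elite_gaussian(samples, costs)
    print("warm-start mean:", mean)
    print("warm-start covariance diagonal:", [cov[d][d] for d in range(3)])
```

The resulting mean and covariance could then seed the initial search distribution of an evolution strategy, which is the role the abstract assigns to this stage.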

2025

-

Efficient Self-Supervised Neural Architecture Search

Sri Aditya Deevi, Asish Kumar Mishra, Deepak Mishra, and 3 more authors

Proceedings of International Conference on Ubiquitous Information Management and Communication (IMCOM), Feb 2025

Deep Neural Networks (DNNs) have successfully demonstrated superior performance on many tasks across multiple domains. Their success is made possible by expert practitioners’ careful design of neural architectures. This manual handcrafted design requires enormous computational resources, time, and memory to arrive at an optimal architecture. Automated Neural Architecture Search (NAS) is a promising area to explore to overcome these issues. However, optimizing a network for a given task is tedious, requiring lengthy search times, heavy compute, and a thorough examination of an enormous number of possibilities. What is needed is a strategy that saves time while maintaining an excellent level of accuracy. In this paper, we design, explore, and experiment with various differentiable NAS methods that are memory-, time-, and compute-efficient. We also explore the role and efficacy of self-supervision in guiding the search for optimal architectures. Self-supervision offers numerous advantages, such as facilitating the use of unlabelled data and making the “learning” non-task-specific, thereby improving transfer to other tasks. To study the inclusion of self-supervision in the search process, we propose a simple loss function consisting of a convex combination of supervised cross-entropy loss and self-supervision loss. In addition, we carried out various analyses to characterize the performance of the different approaches considered in this paper. The results of various experiments on CIFAR-10 reveal that the proposed methodology balances time and accuracy while staying as near as possible to the state-of-the-art results.
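The convex combination mentioned in the abstract above can be written, in one plausible form, as follows. The symbols are assumptions introduced here for illustration, and the abstract does not say which term carries the weight: $\lambda$ is a mixing weight, $\mathcal{L}_{\mathrm{CE}}$ is the supervised cross-entropy loss, and $\mathcal{L}_{\mathrm{SSL}}$ is the self-supervision loss.

```latex
\mathcal{L}_{\mathrm{total}}
  = \lambda \, \mathcal{L}_{\mathrm{CE}}
  + (1 - \lambda) \, \mathcal{L}_{\mathrm{SSL}},
\qquad 0 \le \lambda \le 1
```

Under this form, $\lambda = 1$ would recover a purely supervised search and $\lambda = 0$ a purely self-supervised one.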

-

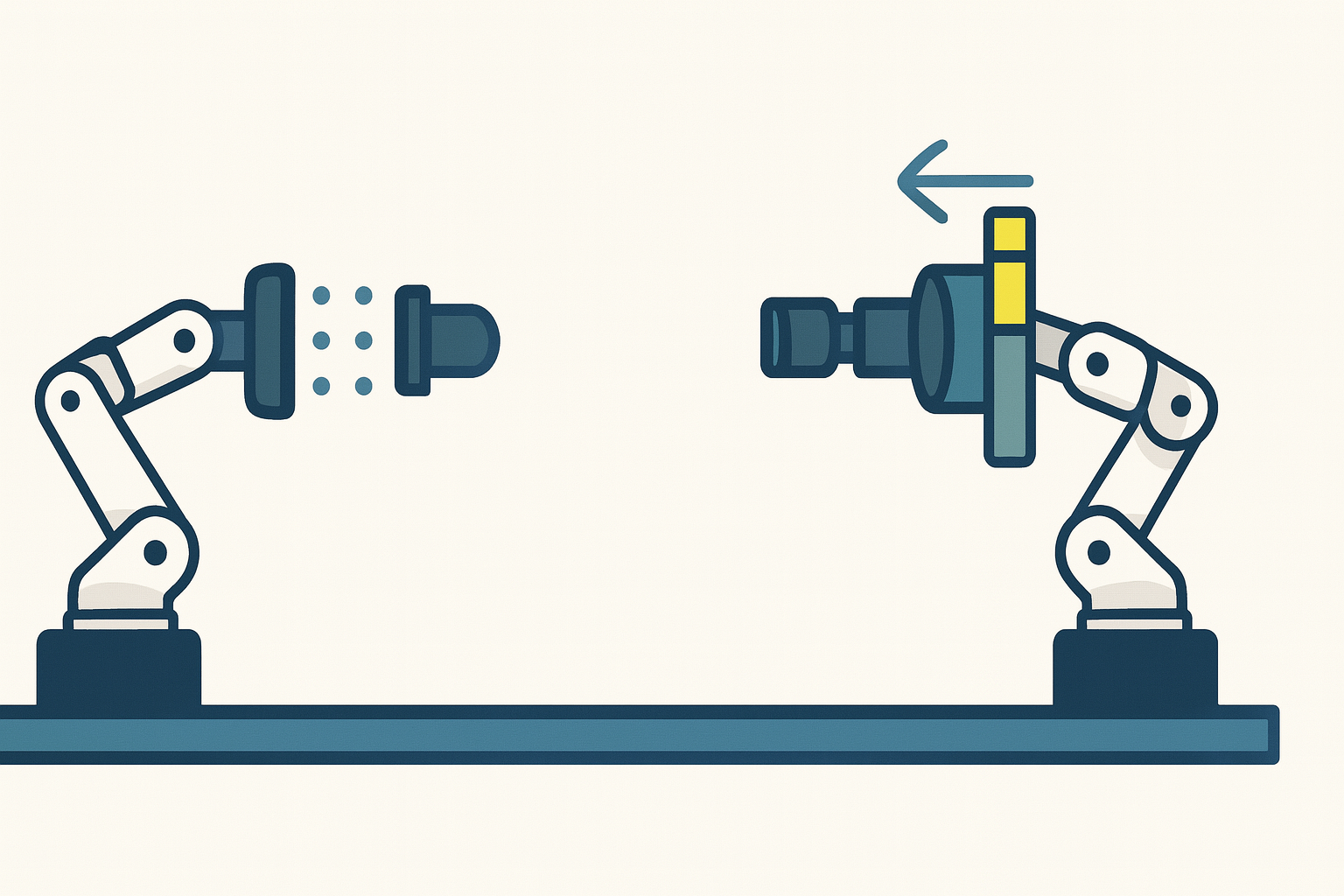

Laser Diode Motion Simulator: Extending the capabilities of Hardware-in-Loop Space Rendezvous Testing

Sri Aditya Deevi, Aakash Chaudhary, Samuel Lakkoju, and 3 more authors

Accepted at the Advances in Robotics (AIR) Conference, Jul 2025

In the realm of space exploration, the precision of spacecraft rendezvous operations is paramount. Traditional Hardware-in-the-Loop Simulation (HILS) facilities often encounter constraints due to limited track lengths, which can impede the accurate emulation of relative movements between spacecraft. To address this challenge, we have enhanced our HILS Space Rendezvous Robotic Testbed by integrating a Laser Diode (LD) Motion Simulator. The robotic testbed features two industrial robots, one equipped with photodetectors and the other with laser diodes, to replicate the relative motion between chaser and target spacecraft during proximity operations. To further augment this relative motion, the LDs are mounted on a motion mechanism. By physically moving the LDs, we can effectively simulate the dynamics of two spacecraft over extended distances without requiring large physical tracks. Preliminary experiments, encompassing both simulation and hardware implementations, have demonstrated the efficacy of this approach in overcoming spatial limitations and enhancing the realism of rendezvous testing scenarios.

-

Neural Radiance Fields in Space Applications: A Comprehensive Review

Abraham Paul, Sri Aditya Deevi, Aakash Chaudhary, and 3 more authors

International Journal of Advanced Research (IJAR), Mar 2025

Neural Radiance Fields (NeRF) have emerged as a powerful deep learning technique, revolutionizing the representation and rendering of 3D scenes. Although originally developed for computer vision and graphics applications, the potential of NeRF is increasingly being recognized in space-related fields. This paper provides a comprehensive review of the applications, advancements and challenges associated with the use of NeRF in space exploration, satellite imaging and remote sensing. We begin by introducing the foundational concepts of NeRF, including its architecture, underlying principles and computational requirements. We then explore how NeRF has been adapted and applied to space-specific challenges such as high-resolution 3D reconstruction of planetary surfaces, the visualization of satellite data and the enhancement of space mission planning. Furthermore, we discuss the integration of NeRF with other cutting-edge technologies like machine learning, autonomous systems and real-time rendering, highlighting the potential for future breakthroughs in space missions. Finally, we outline the current limitations and open research questions, offering insights into the future directions of NeRF in space applications. This review aims to serve as a valuable resource for researchers and practitioners exploring the intersection of machine learning, computer graphics and space science.

-

High Fidelity Hardware-in-Loop Simulation of Autonomous Spacecraft Rendezvous and Docking using Dual-Robot Platform on Track

Ravikumar L., Samuel Lakkoju, Kartikeyan Bhanu, and 4 more authors

Submitted to the 2025 International Conference on Space Robotics (iSpaRo), Dec 2025

In this paper, we present the Rendezvous Simulation Laboratory (RSL), a high‑fidelity hardware‑in‑the‑loop facility built around dual 6‑DoF robotic manipulators mounted on an 18 m linear track to emulate autonomous spacecraft rendezvous and docking. We demonstrate sub‑millimetre positioning accuracy and robust soft‑docking in closed‑loop tests under varying approach trajectories, lighting conditions, and communication delays. Finally, we validate navigation, guidance, and control algorithms for proximity operations in ISRO’s SPADEX mission through exhaustive ground verification and outline proposed extensions to RSL for future docking and on‑orbit servicing missions.

2024

-

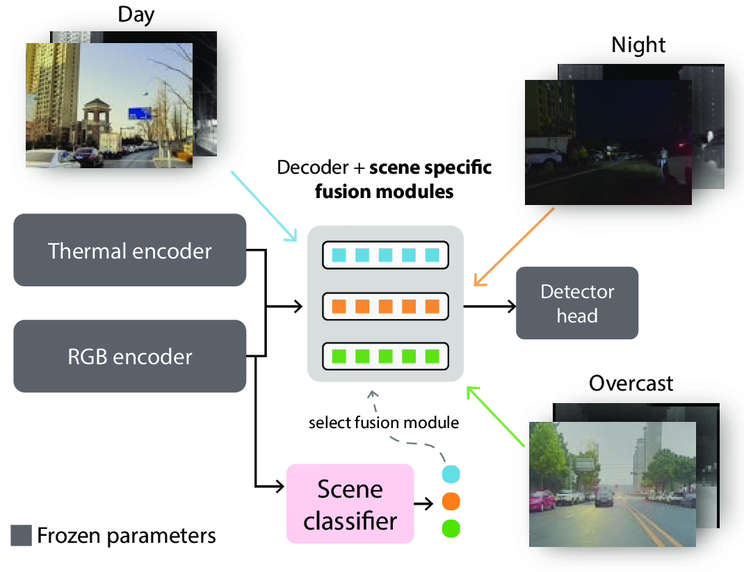

RGB-X Object Detection via Scene-Specific Fusion Modules

Sri Aditya Deevi, Connor Lee, Lu Gan, and 3 more authors

Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Jan 2024

Multimodal deep sensor fusion has the potential to enable autonomous vehicles to visually understand their surrounding environments in all weather conditions. However, existing deep sensor fusion methods usually employ convoluted architectures with intermingled multimodal features, requiring large coregistered multimodal datasets for training. In this work, we present an efficient and modular RGB-X fusion network that can leverage and fuse pretrained single-modal models via scene-specific fusion modules, thereby enabling joint input-adaptive network architectures to be created using small, coregistered multimodal datasets. Our experiments demonstrate the superiority of our method compared to existing works on RGB-thermal and RGB-gated datasets, performing fusion using only a small number of additional parameters. Our code is available at https://github.com/dsriaditya999/RGBXFusion.

-

Effective Landmark Regression using Attention-based HRNet for Satellite Pose Estimation

Abraham Paul, Anoop G.L, Ganesh Kumar R., and 3 more authors

Proceedings of South Asian Research Center (SARC) International Conference, Nov 2024

For many space missions, it is important to estimate the position and orientation of the satellite for operations such as docking and debris removal. This involves three stages: object detection, landmark regression, and pose estimation. For object detection, we used Faster-RCNN with HRNet as the backbone; landmark regression is done using the AHRNet architecture; and pose estimation is implemented using the PnP algorithm. First, satellite images created in Blender were labeled with bounding boxes around the satellite and used to train the satellite detector. An AHRNet was then trained for landmark regression using a 4-fold cross-validation approach, which involved splitting the dataset into multiple training and validation sets to enhance the Intersection over Union (IoU) metric. After the landmark regression provided a 2D projection of 3D ground truth points, the PnP algorithm was used for pose estimation. To improve pose estimation accuracy, we integrated solvePnP with an iterator argument utilizing the Levenberg-Marquardt (LM) method to reduce noise and outliers. Our methodology significantly improves the precision and efficiency of satellite translation and rotation estimates during docking, offering a viable solution for autonomous space missions, with potential for future improvements in domain adaptability through the development of unsupervised domain adaptation models. The results of the landmark regression using AHRNet show improved reprojection, orientation, and translation errors.

2022

-

Expeditious object pose estimation for autonomous robotic grasping

Sri Aditya Deevi and Deepak Mishra

International Conference on Computer Vision and Image Processing, Nov 2022

The main principle behind vision-based Autonomous Robotic Grasping is the ability of a robot to sense and “perceive” its surroundings using vision-based sensors, so that it can interact with and influence objects of interest by grasping them. To realise this task of autonomous object grasping, one of the critical sub-tasks is the 6D Pose Estimation of a known object of interest from sensory data in a given environment. The sensory data can include RGB images and data from depth sensors, but determining the object’s pose using only a single RGB image is cost-effective and highly desirable in many applications. In this work, we develop a series of convolutional neural network-based pose estimation models without post-refinement stages, designed to achieve high accuracy on relevant metrics for efficiently estimating the 6D pose of an object, using only a single RGB image. The designed models are incorporated into an end-to-end pose estimation pipeline based on Unity and ROS Noetic, where a UR3 Robotic Arm is deployed in a simulated pick-and-place task. The pose estimation performance of the different models is compared and analysed in both same-environment and cross-environment cases utilising synthetic RGB data collected from cluttered and simple simulation scenes constructed in Unity Environment. In addition, the developed models achieved high Average Distance (ADD) metric scores greater than 93% for most of the real-life objects tested in the LINEMOD dataset and can be integrated seamlessly with any robotic arm for estimating 6D pose from only RGB data, making our method effective, efficient and generic.
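For readers unfamiliar with the Average Distance (ADD) metric named in the abstract above, the sketch below shows how it is commonly computed: the mean Euclidean distance between object model points transformed by the ground-truth pose and by the predicted pose. The function names, the toy points, and the 10%-of-diameter threshold are assumptions for illustration. That threshold is a widely used convention, and the abstract does not state which one the paper applies.

```python
import math


def transform(R, t, p):
    """Apply a rigid transform (3x3 rotation R, translation t) to a 3D point p."""
    return tuple(sum(R[i][k] * p[k] for k in range(3)) + t[i] for i in range(3))


def add_metric(points, R_gt, t_gt, R_pred, t_pred):
    """Mean distance between model points under the true and predicted poses."""
    total = 0.0
    for p in points:
        total += math.dist(transform(R_gt, t_gt, p), transform(R_pred, t_pred, p))
    return total / len(points)


def is_correct(add_value, diameter, frac=0.1):
    """Count a pose as correct when ADD is below a fraction of the object diameter."""
    return add_value < frac * diameter


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    # Hypothetical model points (metres) and a prediction offset by 1 cm in z.
    model_points = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.0, 0.1, 0.0)]
    value = add_metric(model_points, identity, (0.0, 0.0, 0.0),
                       identity, (0.0, 0.0, 0.01))
    print("ADD:", value, "correct:", is_correct(value, diameter=0.2))
```

A percentage score such as the one quoted in the abstract is then typically the share of test images whose predicted pose passes the `is_correct` check.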

-

Data Summarization in Internet of Things

Sri Aditya Deevi and BS Manoj

SN Computer Science, May 2022

With recent advances in the field of Internet of Things (IoT), the quantity of data being generated by various sensors and Internet users has increased dramatically, which in turn has sharply increased the need for efficient data compression methods. Data summarization is an efficient and effective technique for data compression that can generate a succinct summary from typically larger quantities of data in an intelligent and highly useful manner, and it can be done at various levels of abstraction. Using such a technique in large IoT networks can be significantly advantageous in terms of reducing processing time, overall computation, data storage and transmission requirements, energy consumption, and possible workload on IoT users. In this work, existing data summarization techniques at various levels of abstraction of typical IoT networks are reviewed. The levels of categorization considered are Low-level and High-level. Under each abstraction level, the techniques are further classified, with a brief description of their essential characteristics.

2021

-

HeartNetEC: a deep representation learning approach for ECG beat classification

Sri Aditya Deevi, Christina Perinbam Kaniraja, Vani Devi Mani, and 3 more authors

Biomedical Engineering Letters, Feb 2021

One of the most crucial and informative tools at a cardiologist’s disposal for examining the condition of a patient’s cardiovascular system is the electrocardiogram (ECG/EKG). Accurate reconstruction of the ECG matters because the shape of the ECG tracing is crucial for determining the health condition of an individual. Whether the patient is prone to or diagnosed with cardiovascular diseases (CVDs), this information can be gathered through examination of the ECG signal. One of the most helpful methods for identifying cardiac abnormalities is beat-wise categorization of a patient’s ECG record. In this work, a highly efficient deep representation learning approach for ECG beat classification is proposed, which can significantly reduce the burden and time spent by a cardiologist on ECG analysis. The system consists of two sub-systems: a denoising block and a beat classification block. The denoising block acquires the ECG signal from the patient and denoises it. The beat classification block then processes the denoised ECG signal to identify the different classes of beats through an efficient algorithm. Both stages employ deep learning-based methods. Our proposed approach has been tested on PhysioNet’s MIT-BIH Arrhythmia Database, for beat-wise classification into ten important types of heartbeats. As per the results obtained, the proposed approach is capable of making meaningful predictions and gives superior results on relevant metrics.